Had no chance to do a debug log yet…

Clients are set to auto-upgrade, so it should be 2.0.18 to 2.0.19.

Clients run Windows 7 64Bit and Windows 8.1 64Bit

The double icon on OSX is back again.

Server 2.0.21 [Debian 8.3] Client 2.0.19 [Windows 7]

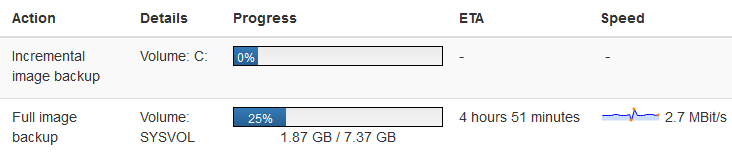

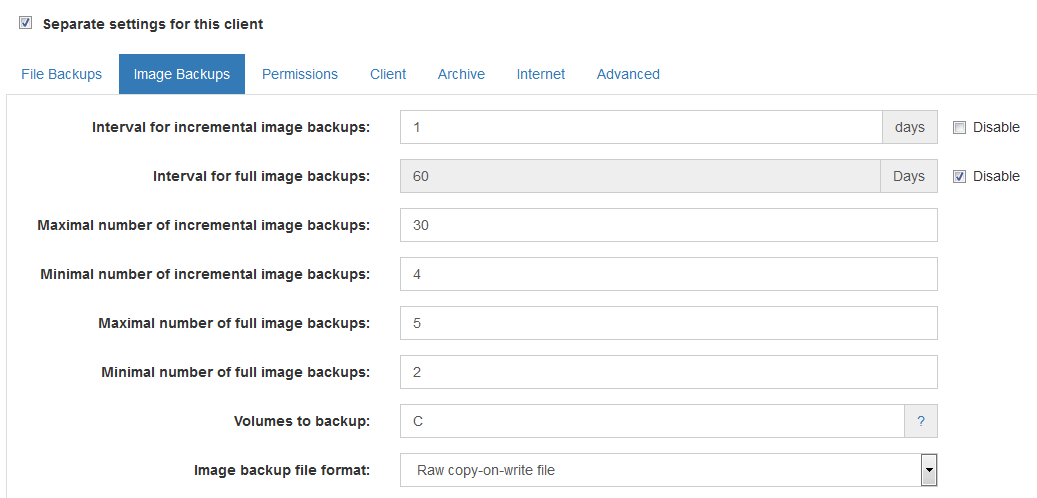

Only issue I’m running into is the backing up of the sysvol partition. Some PCs have large sysvols for some reason (maybe an OEM thing?) and every time an incremental image backup is started, a full backup of the sysvol partition is being made. Not an issue for most PCs, but the oddball systems are a problem. I’ve tried to exclude backing up the sysvol partition but it is still backing it up. Other than attempting to shrink the sysvol partition, is there any way to do an incremental on the sysvol or skip it altogether?

Otherwise, 2.0.21 is working well!

Config : Debian 8.4 / UrBackup 2.0.21 / Dedicated Btrfs Storage 6TB

On my UrBackup server, the cleanup session takes very long: it started around 13:00 today and is still at “checking database integrity” at 20:30!

Is anybody else experiencing such delays?

Here are the contents of my /var/urbackup:

root@urbackup:~# ll -h /var/urbackup/

total 25G

-rw-r--r-- 1 urbackup urbackup 29 mai 16 22:16 backupfolder

-rw-r--r-- 1 urbackup urbackup 7,6G mai 19 09:29 backup_server.db

-rw-r--r-- 1 urbackup urbackup 64K mai 19 16:29 backup_server.db-shm

-rw-r--r-- 1 urbackup urbackup 25M mai 19 16:29 backup_server.db-wal

-rw-r--r-- 1 urbackup urbackup 17G mai 19 16:29 backup_server_files.db

-rw-r--r-- 1 urbackup urbackup 12M mai 19 17:36 backup_server_files.db-shm

-rw-r--r-- 1 urbackup urbackup 92K mai 19 16:29 backup_server_files.db-wal

-rw-r--r-- 1 urbackup urbackup 50M mai 18 12:04 backup_server_link_journal.db

-rw-r--r-- 1 urbackup urbackup 32K mai 18 20:56 backup_server_link_journal.db-shm

-rw-r--r-- 1 urbackup urbackup 1,1K mai 18 12:04 backup_server_link_journal.db-wal

-rw-r--r-- 1 urbackup urbackup 4,0K mai 13 10:33 backup_server_links.db

-rw-r--r-- 1 urbackup urbackup 32K mai 18 12:04 backup_server_links.db-shm

-rw-r--r-- 1 urbackup urbackup 0 mai 18 12:04 backup_server_links.db-wal

-rw-r--r-- 1 urbackup urbackup 157K mai 19 09:29 backup_server_settings.db

-rw-r--r-- 1 urbackup urbackup 32K mai 19 20:26 backup_server_settings.db-shm

-rw-r--r-- 1 urbackup urbackup 195K mai 19 20:26 backup_server_settings.db-wal

-rwxr-x--- 1 urbackup urbackup 4,9M mai 13 00:20 clientlist_b_238.ub

-rwxr-x--- 1 urbackup urbackup 6,2M mai 13 00:31 clientlist_b_239.ub

-rwxr-x--- 1 urbackup urbackup 16M mai 13 03:58 clientlist_b_242.ub

-rwxr-x--- 1 urbackup urbackup 53M mai 13 16:03 clientlist_b_249.ub

-rwxr-x--- 1 urbackup urbackup 1,2M mai 18 22:44 clientlist_b_287.ub

-rwxr-x--- 1 urbackup urbackup 4,9M mai 19 00:06 clientlist_b_288.ub

-rwxr-x--- 1 urbackup urbackup 6,3M mai 19 00:13 clientlist_b_289.ub

-rwxr-x--- 1 urbackup urbackup 11M mai 19 02:01 clientlist_b_290.ub

-rwxr-x--- 1 urbackup urbackup 16M mai 19 04:13 clientlist_b_291.ub

-rwxr-x--- 1 urbackup urbackup 19M mai 19 02:28 clientlist_b_292.ub

-rwxr-x--- 1 urbackup urbackup 21M mai 19 03:26 clientlist_b_293.ub

-rwxr-x--- 1 urbackup urbackup 23M mai 19 03:10 clientlist_b_294.ub

-rwxr-x--- 1 urbackup urbackup 29M mai 19 03:42 clientlist_b_295.ub

-rwxr-x--- 1 urbackup urbackup 30M mai 19 03:08 clientlist_b_296.ub

-rwxr-x--- 1 urbackup urbackup 34M mai 19 04:16 clientlist_b_297.ub

-rwxr-x--- 1 urbackup urbackup 19M mai 19 04:54 clientlist_b_298.ub

-rwxr-x--- 1 urbackup urbackup 15M avril 25 23:57 clientlist_b_41.ub

-rwxr-x--- 1 urbackup urbackup 19M avril 26 23:49 clientlist_b_56.ub

-rwxr-x--- 1 urbackup urbackup 28M avril 27 00:45 clientlist_b_59.ub

-rwxr-x--- 1 urbackup urbackup 15M avril 27 07:39 clientlist_b_62.ub

-rwxr-x--- 1 urbackup urbackup 0 avril 27 22:40 clientlist_b_75.ub

-rwxr-x--- 1 urbackup urbackup 0 avril 28 00:00 clientlist_b_84.ub

-rwxr-x--- 1 urbackup urbackup 0 avril 28 19:41 clientlist_b_86.ub

drwxr-x--- 2 urbackup urbackup 4,0K mars 29 14:56 fileindex

-rw-r--r-- 1 urbackup urbackup 391 mars 29 14:56 server_ident_ecdsa409k1.priv

-rw-r--r-- 1 urbackup urbackup 436 mars 29 14:56 server_ident_ecdsa409k1.pub

-rw-r--r-- 1 urbackup urbackup 23 mars 29 14:56 server_ident.key

-rw-r--r-- 1 urbackup urbackup 334 mars 29 14:56 server_ident.priv

-rw-r--r-- 1 urbackup urbackup 442 mars 29 14:56 server_ident.pub

-rw-r--r-- 1 urbackup urbackup 20 mars 29 14:56 server_token.key

-rwxr-x--- 1 urbackup urbackup 0 mai 9 12:48 server_version_info.properties

-rw-r--r-- 1 urbackup urbackup 31M mai 11 22:18 UrBackupUpdate.exe

-rw-r--r-- 1 urbackup urbackup 9,5M mai 11 22:19 UrBackupUpdateLinux.sh

-rw-r--r-- 1 urbackup urbackup 102 mai 11 22:20 UrBackupUpdateLinux.sig2

-rw-r--r-- 1 urbackup urbackup 40 mai 11 22:20 UrBackupUpdate.sig

-rw-r--r-- 1 urbackup urbackup 102 mai 11 22:12 UrBackupUpdate.sig2

-rw-r--r-- 1 urbackup urbackup 3 mai 11 22:23 version.txt

root@urbackup:~# ll -h /var/urbackup/fileindex/

total 651M

-rw-r--r-- 1 urbackup urbackup 651M mai 19 16:28 backup_server_files_index.lmdb

-rw-r--r-- 1 urbackup urbackup 256K mai 19 16:27 backup_server_files_index.lmdb-lock

root@urbackup:~#

@Greg

Will be done, but I want to only fix bugs with 2.0.x at this point.

@TomTomGo

With larger installations you should think about disabling the internal backup and using something else, e.g. installing the client on the server. You’d need to use one of the Linux snapshot mechanisms to back up the database.

The internal backup of the database does not take very long; the longest step is the cleanup phase, which takes a few hours. Is there a way to make it faster?

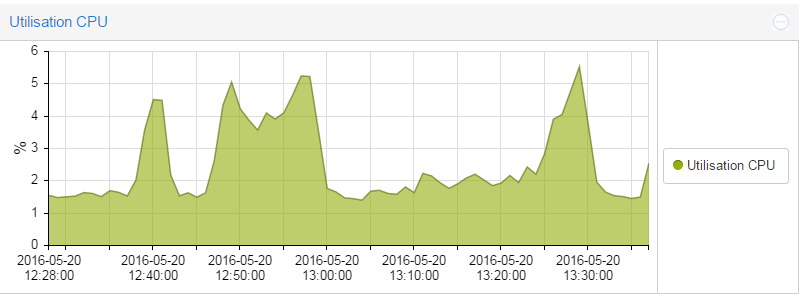

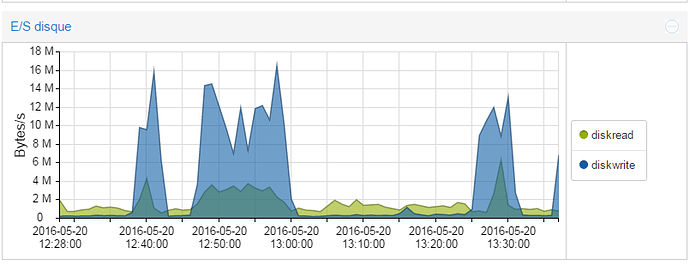

Is it a disk or cpu bottleneck?

Just like Greg, I’m seeing these on 2.0.21 and 2.0.19 [windows 7 pro]. However, my client is only at 70% complete:

05/19/16 19:37 DEBUG Loading "urbackup/FILE_METADATA|8EM9s5LxiyGiLXMUMTZs|873". Loaded 283.551 KB at 0 Bit/s

05/19/16 19:38 DEBUG Loading "urbackup/FILE_METADATA|8EM9s5LxiyGiLXMUMTZs|873". Loaded 283.552 KB at 0 Bit/s

05/19/16 19:39 DEBUG Loading "urbackup/FILE_METADATA|8EM9s5LxiyGiLXMUMTZs|873". Loaded 283.553 KB at 0 Bit/s

05/19/16 19:40 DEBUG Loading "urbackup/FILE_METADATA|8EM9s5LxiyGiLXMUMTZs|873". Loaded 283.554 KB at 0 Bit/s

05/19/16 19:41 DEBUG Loading "urbackup/FILE_METADATA|8EM9s5LxiyGiLXMUMTZs|873". Loaded 283.555 KB at 0 Bit/s

05/19/16 19:42 DEBUG Loading "urbackup/FILE_METADATA|8EM9s5LxiyGiLXMUMTZs|873". Loaded 283.556 KB at 0 Bit/s

05/19/16 19:43 DEBUG Loading "urbackup/FILE_METADATA|8EM9s5LxiyGiLXMUMTZs|873". Loaded 283.557 KB at 0 Bit/s

05/19/16 19:44 DEBUG Loading "urbackup/FILE_METADATA|8EM9s5LxiyGiLXMUMTZs|873". Loaded 283.558 KB at 0 Bit/s

05/19/16 19:45 DEBUG Loading "urbackup/FILE_METADATA|8EM9s5LxiyGiLXMUMTZs|873". Loaded 283.559 KB at 0 Bit/s

05/19/16 19:46 DEBUG Loading "urbackup/FILE_METADATA|8EM9s5LxiyGiLXMUMTZs|873". Loaded 283.56 KB at 0 Bit/s

05/19/16 19:47 DEBUG Loading "urbackup/FILE_METADATA|8EM9s5LxiyGiLXMUMTZs|873". Loaded 283.561 KB at 0 Bit/s

05/19/16 19:48 DEBUG Loading "urbackup/FILE_METADATA|8EM9s5LxiyGiLXMUMTZs|873". Loaded 283.562 KB at 0 Bit/s

05/19/16 19:49 DEBUG Loading "urbackup/FILE_METADATA|8EM9s5LxiyGiLXMUMTZs|873". Loaded 283.562 KB at 0 Bit/s

05/19/16 19:50 DEBUG Loading "urbackup/FILE_METADATA|8EM9s5LxiyGiLXMUMTZs|873". Loaded 283.563 KB at 0 Bit/s

05/19/16 19:51 DEBUG Loading "urbackup/FILE_METADATA|8EM9s5LxiyGiLXMUMTZs|873". Loaded 283.564 KB at 0 Bit/s

05/19/16 19:52 DEBUG Loading "urbackup/FILE_METADATA|8EM9s5LxiyGiLXMUMTZs|873". Loaded 283.565 KB at 0 Bit/s

05/19/16 19:53 DEBUG Loading "urbackup/FILE_METADATA|8EM9s5LxiyGiLXMUMTZs|873". Loaded 283.566 KB at 0 Bit/s

Yes, this happens when it tries to remove a snapshot and thinks the snapshot is still in use.

Is it stuck in this state for more than one hour?
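To answer the "how long has it been stuck" question from a pasted client log, a small script can measure how much a transfer actually advanced. This is only a rough sketch: the regular expression is modelled on the DEBUG lines quoted above (US-style date, size in KB), and the function name `transfer_progress` is made up for illustration, not part of UrBackup.

```python
import re
from datetime import datetime

# Modelled on lines such as:
# 05/19/16 19:37 DEBUG Loading "urbackup/FILE_METADATA|...|873". Loaded 283.551 KB at 0 Bit/s
# Assumption: the size is always reported in KB, as in the quoted log.
LINE_RE = re.compile(
    r'(\d{2}/\d{2}/\d{2} \d{2}:\d{2}) DEBUG Loading "([^"]+)"\. '
    r'Loaded ([\d.]+) KB at'
)


def transfer_progress(log_text):
    """Return {transfer name: (minutes elapsed, KB gained)} for log_text."""
    samples = {}
    for stamp, name, kb in LINE_RE.findall(log_text):
        when = datetime.strptime(stamp, "%m/%d/%y %H:%M")
        samples.setdefault(name, []).append((when, float(kb)))

    report = {}
    for name, points in samples.items():
        points.sort()
        (first_time, first_kb), (last_time, last_kb) = points[0], points[-1]
        minutes = (last_time - first_time).total_seconds() / 60
        report[name] = (minutes, last_kb - first_kb)
    return report


# First and last entries from the log quoted above.
sample = (
    '05/19/16 19:37 DEBUG Loading "urbackup/FILE_METADATA|8EM9s5LxiyGiLXMUMTZs|873". '
    'Loaded 283.551 KB at 0 Bit/s\n'
    '05/19/16 19:53 DEBUG Loading "urbackup/FILE_METADATA|8EM9s5LxiyGiLXMUMTZs|873". '
    'Loaded 283.566 KB at 0 Bit/s\n'
)

for name, (minutes, gained) in transfer_progress(sample).items():
    print(f"{name}: {gained:.3f} KB in {minutes:.0f} min")
```

For the quoted excerpt this works out to roughly 0.015 KB over 16 minutes, which covers only the pasted part of the log; a full client debug log would be needed to tell whether the one-hour mark was passed.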

Can you create a client debug log and/or a memory dump of UrBackupClientBackend.exe ?

In most cases it is limited by random disk IO. I.e. the tps column in iostat.

You also have to check whether the backup storage or the database storage is the bottleneck. If both are on the same storage, putting the database on an SSD would be the first optimization step.

The database and the backups are stored on 2 separate virtual disks: 60 GB (/dev/vda) for the system and 6 TB (/dev/vdb) for the Btrfs backup storage.

The virtual disks are stored on NFS shared storage (a Qnap NAS box) with a 256 GB R/W SSD cache enabled.

iostat in the VM shows:

root@urbackup:~# iostat -m -d 30 /dev/vda /dev/vdb

Linux 4.5.0-0.bpo.1-amd64 (urbackup) 20/05/2016 _x86_64_ (4 CPU)

Device: tps MB_read/s MB_wrtn/s MB_read MB_wrtn

vda 131,56 0,99 0,52 279221 145136

vdb 139,32 0,88 3,47 247770 974818

Device: tps MB_read/s MB_wrtn/s MB_read MB_wrtn

vda 138,67 0,96 0,42 28 12

vdb 0,00 0,00 0,00 0 0

Device: tps MB_read/s MB_wrtn/s MB_read MB_wrtn

vda 154,23 1,15 0,19 34 5

vdb 0,00 0,00 0,00 0 0

Device: tps MB_read/s MB_wrtn/s MB_read MB_wrtn

vda 139,33 0,90 0,08 27 2

vdb 0,00 0,00 0,00 0 0

Device: tps MB_read/s MB_wrtn/s MB_read MB_wrtn

vda 120,87 0,70 0,13 21 3

vdb 0,00 0,00 0,00 0 0

Device: tps MB_read/s MB_wrtn/s MB_read MB_wrtn

vda 87,90 0,53 0,89 15 26

vdb 0,00 0,00 0,00 0 0

The spikes in disk activity in my previous post occur when backups are deleted from the backup storage (/dev/vdb):

Device: tps MB_read/s MB_wrtn/s MB_read MB_wrtn

vda 56,17 0,30 0,02 8 0

vdb 718,13 3,63 12,27 108 368

Device: tps MB_read/s MB_wrtn/s MB_read MB_wrtn

vda 88,93 0,50 0,14 14 4

vdb 596,53 4,92 7,83 147 234

Device: tps MB_read/s MB_wrtn/s MB_read MB_wrtn

vda 55,60 0,36 0,10 10 3

vdb 505,83 3,15 7,35 94 220

Device: tps MB_read/s MB_wrtn/s MB_read MB_wrtn

vda 45,07 0,26 0,02 7 0

vdb 676,30 3,07 11,77 92 353

Device: tps MB_read/s MB_wrtn/s MB_read MB_wrtn

vda 54,23 0,38 0,11 11 3

vdb 694,47 3,29 12,35 98 370

Device: tps MB_read/s MB_wrtn/s MB_read MB_wrtn

vda 43,60 0,24 0,11 7 3

vdb 656,70 3,12 11,62 93 348

Device: tps MB_read/s MB_wrtn/s MB_read MB_wrtn

vda 53,60 0,30 0,08 9 2

vdb 709,03 3,20 11,85 95 355

Unfortunately, putting the system disk on an SSD is not possible at the moment.
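For comparing phases like the two above, it can help to average the tps column per device instead of eyeballing the samples. Here is a minimal sketch, assuming `iostat -d` style text with comma decimals as pasted in this thread; `mean_tps` is a hypothetical helper name. Keep in mind that the first report iostat prints covers the time since boot, so it is best left out of the input.

```python
from collections import defaultdict


def mean_tps(iostat_text):
    """Average the tps column per device from `iostat -d` text output."""
    totals = defaultdict(lambda: [0.0, 0])  # device -> [sum of tps, sample count]
    for line in iostat_text.splitlines():
        fields = line.split()
        # Data rows have six columns: device, tps and four throughput/total columns.
        if len(fields) != 6 or fields[0].startswith("Device"):
            continue
        try:
            # The pasted output uses a comma as decimal separator.
            tps = float(fields[1].replace(",", "."))
        except ValueError:
            continue
        totals[fields[0]][0] += tps
        totals[fields[0]][1] += 1
    return {device: total / count for device, (total, count) in totals.items()}


# Two intervals taken from the "backups are being deleted" phase above.
deleting = """\
Device: tps MB_read/s MB_wrtn/s MB_read MB_wrtn
vda 56,17 0,30 0,02 8 0
vdb 718,13 3,63 12,27 108 368
Device: tps MB_read/s MB_wrtn/s MB_read MB_wrtn
vda 88,93 0,50 0,14 14 4
vdb 596,53 4,92 7,83 147 234
"""

for device, tps in sorted(mean_tps(deleting).items()):
    print(f"{device}: {tps:.2f} tps on average")
```

Feeding it the samples from the database-only phase and from the deletion phase separately shows which disk is busy in which phase.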

Is it possible to apply/guarantee 60GB worth of SSD caching for the database drive to ensure the 6TB drive isn’t consuming all of the cache? If that isn’t possible, maybe take the performance hit on the 6TB drive by only caching the 60GB drive (if possible)…

I agree that using SSD on the database is the way to go considering how large the database is…

Yes, I agree. I will try with the OS and database on SSD as soon as possible to see if there is a big improvement.

BTW, do you plan to add the ability to do file-level restores from image backups in the GUI? It would be a great feature for people (like me) who are using UrBackup to back up virtual machines.

With such a feature, we could use image backups alone for both disaster recovery (restoring full VMs) and file-level restore (files inside a VM).

Thanks

@Uroni - thanks for continuing to work on UrBackup and improve it. We all really appreciate it. Do you have an idea of when 2.0.x will be out of beta?

I upgraded UrBackup Server from 2.0.5 to 2.0.21. When I logged into the web console, I am greeted with this message:

The directory where UrBackup will save backups is inaccessible. Please fix that by modifying this folder in ‘Settings’ or by giving UrBackup rights to access this directory. (err_cannot_create_symbolic_links). The file or directory is not a reparse point. (code: 4390)

UrBackup cannot create symbolic links on the backup storage. Your backup storage must support symbolic links in order for UrBackup to work correctly. The UrBackup Server must run as administrative user on Windows. Note: As of 2016-05-07 samba has not implemented the necessary functionality to support symbolic link creation from Windows.

I went into Windows services, changed the logon user to my admin user and restarted the service. I still get the same error message that my backup location is inaccessible. It was working fine with 2.0.5 before the upgrade. Am I missing a simple step?

Update #1: I decided to manually run a backup job on a client to see what would happen. The backup is currently running and I can see the new/updated files on my network share. So, I’m not sure how on one hand it thinks “the directory where UrBackup will save backups is inaccessible” and on the other hand it is clearly writing files to it?

Update #2: The backup job says it succeeded, but I just noticed all these kinds of errors in the urbackup.log file:

ERROR: Error opening file '\\FileNAS\Backups\volt\160511-2034_Image_C\Image_C_160511-2034.vhdz'

2016-05-22 07:56:55: ERROR: Error opening file '\\FileNAS\Backups\VS-ADFS\160511-2328_Image_SYSVOL\Image_SYSVOL_160511-2328.vhdz'

2016-05-22 07:56:55: ERROR: Error opening file '\\FileNAS\Backups\VS-ADFS\160511-2328_Image_C\Image_C_160511-2328.vhdz'

2016-05-22 07:56:55: ERROR: Error opening file '\\FileNAS\Backups\Chevy\160512-0129_Image_SYSVOL\Image_SYSVOL_160512-0129.vhdz'

2016-05-22 07:56:55: ERROR: Error opening file '\\FileNAS\Backups\Chevy\160512-0129_Image_C\Image_C_160512-0129.vhdz'

2016-05-22 07:56:55: ERROR: Error opening file '\\FileNAS\Backups\HOST-BRSD\160512-1842_Image_SYSVOL\Image_SYSVOL_160512-1842.vhdz'

2016-05-22 07:56:55: ERROR: Error opening file '\\FileNAS\Backups\HOST-BRSD\160512-1842_Image_C\Image_C_160512-1842.vhdz'

2016-05-22 07:56:55: ERROR: Error opening file '\\FileNAS\Backups\DC2\160512-1853_Image_SYSVOL\Image_SYSVOL_160512-1853.vhdz'

2016-05-22 07:56:55: ERROR: Error opening file '\\FileNAS\Backups\DC2\160512-1853_Image_C\Image_C_160512-1853.vhdz'

2016-05-22 07:56:55: ERROR: Error opening file '\\FileNAS\Backups\TESTINGSERVER\160513-0306_Image_SYSVOL\Image_SYSVOL_160513-0306.vhdz'

2016-05-22 07:56:55: ERROR: Error opening file '\\FileNAS\Backups\TESTINGSERVER\160513-0306_Image_C\Image_C_160513-0306.vhdz'

2016-05-22 10:56:56: ERROR: Creating junction failed. Last error=4390

2016-05-22 10:56:56: ERROR: Creating symbolic link from “\?\UNC\FileNAS\Backups\testfolderHvfgh—dFFoeRRRf_link” to “\?\UNC\FileNAS\Backups\testfolderHvfgh—dFFoeRRRf” failed with error 4390

2016-05-22 10:57:20: ERROR: Creating junction failed. Last error=4390

2016-05-22 10:57:20: ERROR: Creating symbolic link from “\?\UNC\FileNAS\Backups\testfolderHvfgh—dFFoeRRRf_link” to “\?\UNC\FileNAS\Backups\testfolderHvfgh—dFFoeRRRf” failed with error 4390

You are the second one that is confused by this diagnostic message. I guess this will come up often in the future. Does anyone have hints how I can improve this?

For starters I will remove the first sentence, because it is wrong in this context.

Can you explain what this diagnostic message means? And do I need to do anything to fix whatever might be wrong? Is there anything wrong? I’m guessing all those ERRORs in the log aren’t good, right? Thanks again.
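Going by the log lines quoted earlier, the server seems to probe the storage by creating a test folder and then a link to it. One way to check independently whether a location accepts symbolic links is a small probe like the one below. This is a rough sketch and not UrBackup's own code: `can_create_symlink` and the `linktest_` prefix are made-up names, and to be meaningful it would have to run as the same user as the UrBackup service, pointed at the backup folder.

```python
import os
import tempfile


def can_create_symlink(directory):
    """Try to create and remove a directory symlink inside `directory`.

    Returns (True, None) on success or (False, error message) on failure.
    """
    # Temporary folder that the test link will point to.
    target = tempfile.mkdtemp(prefix="linktest_", dir=directory)
    link = target + "_link"
    try:
        os.symlink(target, link, target_is_directory=True)
    except (OSError, NotImplementedError) as exc:
        return False, str(exc)
    else:
        os.unlink(link)
        return True, None
    finally:
        # Always remove the test folder, whether or not linking worked.
        os.rmdir(target)


if __name__ == "__main__":
    # Assumption for the demo: probe the local temp directory.
    # Replace it with the backup folder to test the real storage.
    ok, error = can_create_symlink(tempfile.gettempdir())
    print("symlinks supported" if ok else f"symlink creation failed: {error}")
```

If the probe fails on the share but works on a local NTFS folder, that would point at the storage (for example the samba limitation mentioned in the message) rather than at the account the service runs under.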