Hi,

Our test environment is a Qnap TS 239 Pro II+ (Atom D525 @ 1.8 GHz dual core / 1 GB RAM / 2 Western Digital Re 2 TB in software RAID1) running Debian 8 in place of the Qnap firmware (/boot on the 512 MB DOM, other partitions on the disks), with backported btrfs 4.7 and kernel 4.7 installed, BTRFS backend:

root@backup-3:~# cat /proc/version

Linux version 4.7.0-0.bpo.1-amd64 (debian-kernel@lists.debian.org) (gcc version 4.9.2 (Debian 4.9.2-10) ) #1 SMP Debian 4.7.8-1~bpo8+1 (2016-10-19)

root@backup-3:~# df -h ; btrfs fi df /media/56af2dc3-28fa-4e96-bc1d-3561039ecc62/ ; du -hs /media/56af2dc3-28fa-4e96-bc1d-3561039ecc62/

Sys. de fichiers Taille Utilisé Dispo Uti% Monté sur

udev 10M 0 10M 0% /dev

tmpfs 198M 21M 177M 11% /run

/dev/md0 9,1G 2,6G 6,0G 31% /

tmpfs 494M 0 494M 0% /dev/shm

tmpfs 5,0M 0 5,0M 0% /run/lock

tmpfs 494M 0 494M 0% /sys/fs/cgroup

tmpfs 494M 60K 494M 1% /tmp

/dev/sdb1 475M 60M 391M 14% /boot

/dev/md2 1,9T 735G 1,1T 40% /media/56af2dc3-28fa-4e96-bc1d-3561039ecc62

tmpfs 99M 0 99M 0% /run/user/0

Data, single: total=721.00GiB, used=720.22GiB

System, DUP: total=32.00MiB, used=112.00KiB

Metadata, DUP: total=8.00GiB, used=6.82GiB

GlobalReserve, single: total=512.00MiB, used=0.00B

9,1T /media/56af2dc3-28fa-4e96-bc1d-3561039ecc62/

root@backup-3:~# btrfs version

btrfs-progs v4.7.3

root@backup-3:~#

We only do image backups, and this works fine.

Disks info :

root@backup-3:~# hdparm -i /dev/sda /dev/sdc

/dev/sda:

Model=WDC WD2000FYYZ-01UL1B2, FwRev=01.01K03, SerialNo=WD-WCC1PN6ZYZ63

Config={ HardSect NotMFM HdSw>15uSec SpinMotCtl Fixed DTR>5Mbs FmtGapReq }

RawCHS=16383/16/63, TrkSize=0, SectSize=0, ECCbytes=0

BuffType=unknown, BuffSize=unknown, MaxMultSect=16, MultSect=off

CurCHS=16383/16/63, CurSects=16514064, LBA=yes, LBAsects=3907029168

IORDY=on/off, tPIO={min:120,w/IORDY:120}, tDMA={min:120,rec:120}

PIO modes: pio0 pio3 pio4

DMA modes: mdma0 mdma1 mdma2

UDMA modes: udma0 udma1 udma2 udma3 udma4 udma5 *udma6

AdvancedPM=yes: unknown setting WriteCache=enabled

Drive conforms to: Unspecified: ATA/ATAPI-1,2,3,4,5,6,7

* signifies the current active mode

/dev/sdc:

Model=WDC WD2000FYYZ-01UL1B2, FwRev=01.01K03, SerialNo=WD-WCC1PC6AFPJY

Config={ HardSect NotMFM HdSw>15uSec SpinMotCtl Fixed DTR>5Mbs FmtGapReq }

RawCHS=16383/16/63, TrkSize=0, SectSize=0, ECCbytes=0

BuffType=unknown, BuffSize=unknown, MaxMultSect=16, MultSect=off

CurCHS=16383/16/63, CurSects=16514064, LBA=yes, LBAsects=3907029168

IORDY=on/off, tPIO={min:120,w/IORDY:120}, tDMA={min:120,rec:120}

PIO modes: pio0 pio3 pio4

DMA modes: mdma0 mdma1 mdma2

UDMA modes: udma0 udma1 udma2 udma3 udma4 udma5 *udma6

AdvancedPM=yes: unknown setting WriteCache=enabled

Drive conforms to: Unspecified: ATA/ATAPI-1,2,3,4,5,6,7

* signifies the current active mode

root@backup-3:~#

Partitioning info :

root@backup-3:~# fdisk -l /dev/sda /dev/sdc

Disque /dev/sda : 1,8 TiB, 2000398934016 octets, 3907029168 secteurs

Unités : secteur de 1 × 512 = 512 octets

Taille de secteur (logique / physique) : 512 octets / 512 octets

taille d'E/S (minimale / optimale) : 512 octets / 512 octets

Type d'étiquette de disque : gpt

Identifiant de disque : DACDCC98-A4C5-4A37-A4F1-1FB81433EC6D

Device Start End Sectors Size Type

/dev/sda1 2048 19531775 19529728 9,3G Linux RAID

/dev/sda2 19531776 23437311 3905536 1,9G Linux RAID

/dev/sda3 23437312 3907028991 3883591680 1,8T Linux RAID

Disque /dev/sdc : 1,8 TiB, 2000398934016 octets, 3907029168 secteurs

Unités : secteur de 1 × 512 = 512 octets

Taille de secteur (logique / physique) : 512 octets / 512 octets

taille d'E/S (minimale / optimale) : 512 octets / 512 octets

Type d'étiquette de disque : gpt

Identifiant de disque : 264CAF6D-8F4C-462A-9116-2CDCA1678A28

Device Start End Sectors Size Type

/dev/sdc1 2048 19531775 19529728 9,3G Linux RAID

/dev/sdc2 19531776 23437311 3905536 1,9G Linux RAID

/dev/sdc3 23437312 3907028991 3883591680 1,8T Linux RAID

root@backup-3:~#

Mount options :

# >>> [openmediavault]

UUID=56af2dc3-28fa-4e96-bc1d-3561039ecc62 /media/56af2dc3-28fa-4e96-bc1d-3561039ecc62 btrfs defaults,nofail,compress-force=zlib,enospc_debug 0 2

# <<< [openmediavault]

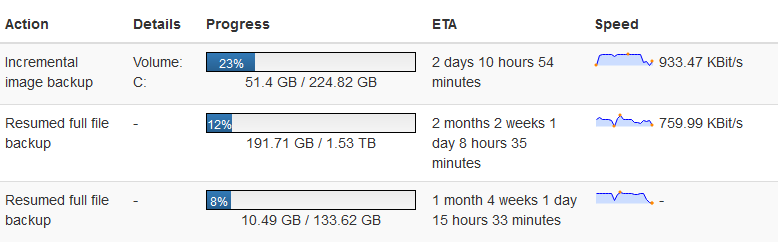

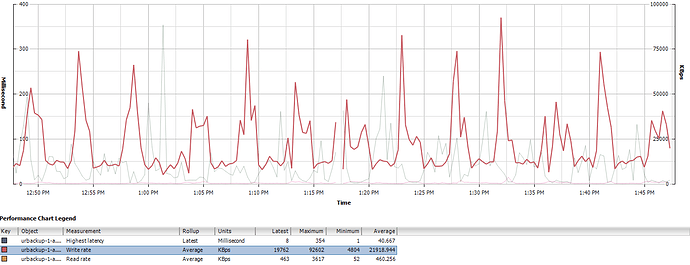

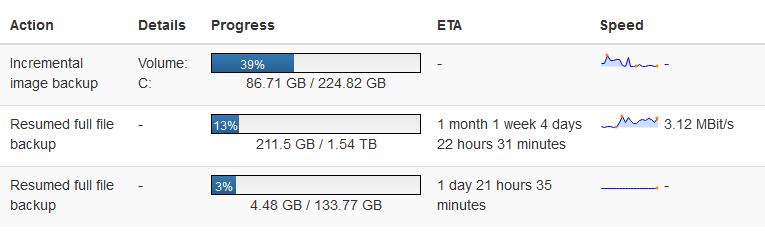

Since we went into production on similar, more recent hardware (Qnap TS 251 / Celeron J1800 @ 2.4 GHz / 1 GB RAM / 2 Seagate 6 TB ST6000VN0021 / software RAID1 / BTRFS), we've noticed a very high CPU I/O wait rate compared to the test platform, i.e. about 90~95% I/O wait and a load average of ~20!

And backups are much, much slower.
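For anyone who wants to compare the I/O wait figure on their own box, here is a minimal Python sketch of how that percentage can be derived from two samples of the aggregate `cpu` line in `/proc/stat`. The sample values in the comment are made up for illustration (they are not taken from our machines), and the function names are just mine.

```python
import time


def parse_cpu_line(line):
    """Return the numeric fields of the aggregate 'cpu' line of /proc/stat."""
    fields = line.split()
    if not fields or fields[0] != "cpu":
        raise ValueError("expected the aggregate 'cpu' line")
    return [int(value) for value in fields[1:]]


def iowait_percent(before, after):
    """Share of CPU time spent in iowait between two 'cpu' samples, in percent."""
    deltas = [b - a for a, b in zip(parse_cpu_line(before), parse_cpu_line(after))]
    # Field order: user nice system idle iowait irq softirq steal guest guest_nice.
    # Guest time is already counted in user/nice, so only the first 8 are summed.
    total = sum(deltas[:8])
    if total <= 0:
        return 0.0
    return 100.0 * deltas[4] / total


def read_cpu_line(path="/proc/stat"):
    """Read the first line (aggregate CPU counters) of /proc/stat."""
    with open(path) as stat_file:
        return stat_file.readline()


if __name__ == "__main__":
    first = read_cpu_line()
    time.sleep(5)  # sampling interval, chosen arbitrarily
    second = read_cpu_line()
    print("iowait: %.1f%%" % iowait_percent(first, second))

# Illustrative (invented) samples:
#   iowait_percent("cpu 100 0 50 1000 200 0 0 0 0 0",
#                  "cpu 120 0 80 1050 1100 0 0 0 0 0")
```

Running it during a backup on both platforms should give numbers comparable to what `top` or the monitoring graphs report.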

Production platform :

root@backup-1:~# cat /proc/version

Linux version 4.7.0-0.bpo.1-amd64 (debian-kernel@lists.debian.org) (gcc version 4.9.2 (Debian 4.9.2-10) ) #1 SMP Debian 4.7.8-1~bpo8+1 (2016-10-19)

root@backup-1:~# btrfs version

btrfs-progs v4.7.3

root@backup-1:~# df -h ; btrfs fi df /media/259a6324-0c5f-4826-beb0-e77430ba0966/ ; du -hs /media/259a6324-0c5f-4826-beb0-e77430ba0966/

Sys. de fichiers Taille Utilisé Dispo Uti% Monté sur

udev 10M 0 10M 0% /dev

tmpfs 178M 22M 157M 12% /run

/dev/md0 19G 2,8G 15G 17% /

tmpfs 444M 0 444M 0% /dev/shm

tmpfs 5,0M 0 5,0M 0% /run/lock

tmpfs 444M 0 444M 0% /sys/fs/cgroup

tmpfs 444M 72K 444M 1% /tmp

/dev/sdc1 475M 60M 391M 14% /boot

/dev/md2 5,5T 1,3T 4,3T 23% /media/259a6324-0c5f-4826-beb0-e77430ba0966

tmpfs 89M 0 89M 0% /run/user/0

Data, single: total=1.24TiB, used=1.21TiB

System, DUP: total=8.00MiB, used=160.00KiB

System, single: total=4.00MiB, used=0.00B

Metadata, DUP: total=13.00GiB, used=11.16GiB

Metadata, single: total=8.00MiB, used=0.00B

GlobalReserve, single: total=512.00MiB, used=33.58MiB

11T /media/259a6324-0c5f-4826-beb0-e77430ba0966/

root@backup-1:~#

Disks info :

root@backup-1:~# hdparm -i /dev/sda /dev/sdb

/dev/sda:

Model=ST6000VN0021-1Z811C, FwRev=SC60, SerialNo=ZA108LPR

Config={ HardSect NotMFM HdSw>15uSec Fixed DTR>10Mbs RotSpdTol>.5% }

RawCHS=16383/16/63, TrkSize=0, SectSize=0, ECCbytes=0

BuffType=unknown, BuffSize=unknown, MaxMultSect=16, MultSect=off

CurCHS=16383/16/63, CurSects=16514064, LBA=yes, LBAsects=11721045168

IORDY=on/off, tPIO={min:120,w/IORDY:120}, tDMA={min:120,rec:120}

PIO modes: pio0 pio1 pio2 pio3 pio4

DMA modes: mdma0 mdma1 mdma2

UDMA modes: udma0 udma1 udma2 udma3 udma4 udma5 *udma6

AdvancedPM=no WriteCache=enabled

Drive conforms to: unknown: ATA/ATAPI-4,5,6,7

* signifies the current active mode

/dev/sdb:

Model=ST6000VN0021-1Z811C, FwRev=SC60, SerialNo=ZA107TF3

Config={ HardSect NotMFM HdSw>15uSec Fixed DTR>10Mbs RotSpdTol>.5% }

RawCHS=16383/16/63, TrkSize=0, SectSize=0, ECCbytes=0

BuffType=unknown, BuffSize=unknown, MaxMultSect=16, MultSect=off

CurCHS=16383/16/63, CurSects=16514064, LBA=yes, LBAsects=11721045168

IORDY=on/off, tPIO={min:120,w/IORDY:120}, tDMA={min:120,rec:120}

PIO modes: pio0 pio1 pio2 pio3 pio4

DMA modes: mdma0 mdma1 mdma2

UDMA modes: udma0 udma1 udma2 udma3 udma4 udma5 *udma6

AdvancedPM=no WriteCache=enabled

Drive conforms to: unknown: ATA/ATAPI-4,5,6,7

* signifies the current active mode

root@backup-1:~#

Partitioning info :

root@backup-1:~# fdisk -l /dev/sda /dev/sdb

Disque /dev/sda : 5,5 TiB, 6001175126016 octets, 11721045168 secteurs

Unités : secteur de 1 × 512 = 512 octets

Taille de secteur (logique / physique) : 512 octets / 4096 octets

taille d'E/S (minimale / optimale) : 4096 octets / 4096 octets

Type d'étiquette de disque : gpt

Identifiant de disque : 5011813B-C24C-4DCA-AD91-0B16D4309280

Device Start End Sectors Size Type

/dev/sda1 2048 39063551 39061504 18,6G Linux RAID

/dev/sda2 39063552 42969087 3905536 1,9G Linux RAID

/dev/sda3 42969088 11721043967 11678074880 5,4T Linux RAID

Disque /dev/sdb : 5,5 TiB, 6001175126016 octets, 11721045168 secteurs

Unités : secteur de 1 × 512 = 512 octets

Taille de secteur (logique / physique) : 512 octets / 4096 octets

taille d'E/S (minimale / optimale) : 4096 octets / 4096 octets

Type d'étiquette de disque : gpt

Identifiant de disque : 42AD109E-23A3-47D2-8D4D-0E5AC99D08F9

Device Start End Sectors Size Type

/dev/sdb1 2048 39063551 39061504 18,6G Linux RAID

/dev/sdb2 39063552 42969087 3905536 1,9G Linux RAID

/dev/sdb3 42969088 11721043967 11678074880 5,4T Linux RAID

root@backup-1:~#

Mount options :

# >>> [openmediavault]

UUID=aaaf8b05-4c27-4948-802b-acce40b9a67e /media/aaaf8b05-4c27-4948-802b-acce40b9a67e btrfs defaults,nofail,compress-force=zlib,enospc_debug 0 2

# <<< [openmediavault]

RAID is clean

root@backup-1:~# cat /proc/mdstat

Personalities : [raid1]

md2 : active raid1 sda3[0] sdb3[1]

5838906368 blocks super 1.2 [2/2] [UU]

bitmap: 0/44 pages [0KB], 65536KB chunk

md1 : active raid1 sda2[0] sdb2[1]

1951744 blocks super 1.2 [2/2] [UU]

md0 : active raid1 sda1[0] sdb1[1]

19514368 blocks super 1.2 [2/2] [UU]

unused devices: <none>

root@backup-1:~#

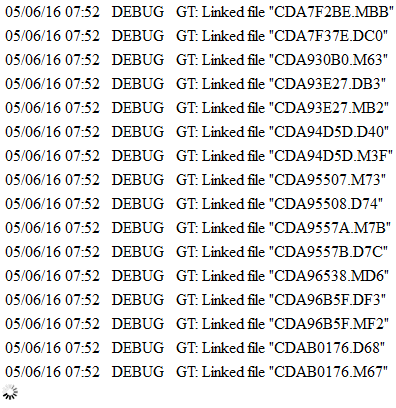

After a lot of testing (swapping disks between NAS boxes, logging, googling, …) I came to the conclusion that the problem is hardware-related: the disks.

The ST6000 are “Advanced Format” disks (physical sector size = 4096 bytes, logical = 512 bytes) and the WD2000 aren't (sector size = 512 bytes).
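Since misaligned partitions are the usual suspect with Advanced Format drives, here is a small Python sketch that checks whether the partition start sectors from the `fdisk` output above fall on a 4096-byte boundary. It only looks at where each partition starts; it says nothing about the md data offset or about how btrfs lays out its own blocks, so treat it as a first sanity check rather than a diagnosis.

```python
LOGICAL_SECTOR = 512    # bytes, as reported by fdisk for the ST6000
PHYSICAL_SECTOR = 4096  # bytes, as reported by fdisk for the ST6000


def is_aligned(start_sector, logical=LOGICAL_SECTOR, physical=PHYSICAL_SECTOR):
    """True if the partition's first byte sits on a physical-sector boundary."""
    return (start_sector * logical) % physical == 0


# Start sectors copied from the fdisk listing of /dev/sda on backup-1
# (/dev/sdb has the same layout).
PARTITION_STARTS = {
    "sda1": 2048,
    "sda2": 39063552,
    "sda3": 42969088,
}

if __name__ == "__main__":
    for name, start in PARTITION_STARTS.items():
        print(name, "aligned" if is_aligned(start) else "NOT aligned")
```

With the start sectors listed above, all three partitions come out as aligned, so plain partition misalignment does not seem to explain the difference.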

Has anybody ever had problems with this type of disk (Advanced Format)? How could we optimize this setup?

Thanks.

Regards,
