Hi there,

I’m new to UrBackup but it came highly recommended from a colleague and so far, I’m very impressed!

I’d like to echo the desire for importing/seeding/exporting backups from a remote location to a central one, or from a central location to a remote one. See the bottom of this message for others requesting a similar feature set.

Our situation is this: We have about 30 sites we’d like to back up to a centralized server and storage array that live in a central data center. The bandwidth to the data center is limited to 100 Mbps up and down, and each site has somewhere between 1 and 5 Mbps upstream. So I’m limited to that 1-5 Mbps to send a backup through, and I’m limited to sending these backups between 6 PM and 7 AM the following morning. In some cases, the window is smaller than that because they run a 2nd shift until midnight. So, if each site has between 250 GB and 1 TB of data to send to the centralized server, it could be months before the first full backup is done. This simply isn’t acceptable.
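To put rough numbers on that, here’s a quick back-of-the-envelope sketch. It assumes the full upstream link is available for the whole 13-hour window (6 PM to 7 AM) and ignores protocol overhead, compression, and deduplication, so real results would differ. The function name `nights_to_seed` is just something I made up for illustration.

```python
def nights_to_seed(data_gb: float, uplink_mbps: float, window_hours: float = 13.0) -> float:
    """Nights needed to upload data_gb at uplink_mbps, sending window_hours per night.

    Assumes decimal units (1 GB = 8e9 bits, 1 Mbps = 1e6 bits/s) and a fully
    saturated link with no overhead, which is optimistic.
    """
    bits = data_gb * 8e9
    seconds = bits / (uplink_mbps * 1e6)
    return seconds / 3600 / window_hours


# Best and worst cases for the data sizes and link speeds described above.
for data_gb, mbps in [(250, 5), (250, 1), (1000, 5), (1000, 1)]:
    print(f"{data_gb} GB at {mbps} Mbps: about {nights_to_seed(data_gb, mbps):.0f} nights")
```

Under those assumptions the range runs from roughly 9 nights (250 GB at 5 Mbps) to roughly 171 nights (1 TB at 1 Mbps), and sites with a 2nd shift would take longer still.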

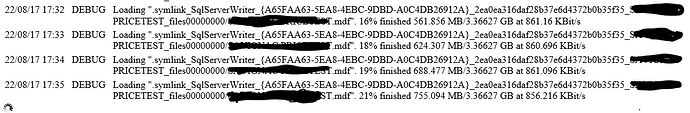

However, if I took a micro-workstation with a 3 TB drive loaded with UrBackup Server to each site and did the initial backup over a weekend to that, I could then transport that workstation to the datacenter and upload the data into the storage enclosure. I then set up the server at each site as an “internet” client and I use the suggested “import” option to import the full backup into the database. Suddenly the amount of data I need to send over the internet nightly is small and manageable.

There are cloud-based services that offer this option, but it is tedious. For instance, without naming names…

If I need to seed a full backup, the one we currently subscribe to has us ship them a SATA hard drive with the initial image on it, and they charge us $200-$500 (USD) for the import. If I want my drive back, I have to send them a prepaid return label in the box with the drive, and they keep the drive for a week or more before the import is online. Occasionally I’m told the backup is “invalid” and I have to repeat the process.

If I need a full restore from them because a server has a catastrophic failure, I have to pay them $250 for a hard drive + $200 for the restore to the drive + overnight shipping. All in all, this is about $500 per incident to either send them a lot of data or receive a lot of data back, and I have a location that sends workers home for 2 days unpaid because they cannot operate. So not only do we lose 2 days of production and end up with angry customers, we also have workers who are angry that they cannot work and who lose 2 days of pay. In the US they can submit this to unemployment, and that’s another set of nightmares for the worker and for the company.

With an export option, when the network monitoring software notifies me that a site is down, I could remote into the server using iDRAC or iLO and diagnose the problem remotely, then run to the centralized data center, export the last full backup from UrBackup to the micro-workstation, run it to the site that is down, and restore the data. It may take me 12 hours and be a long day for me, but I would have saved an entire day for the company and the workers.

For those wondering, the last time this happened it was a RAID controller that went bad, and the identical replacement RAID controller refused to import the old array configuration. So my server was down, and when I got the replacement part from the server manufacturer (4 hour turnaround), the RAID array had to be rebuilt. Then I needed to do a full restore, so I was waiting for the drive to arrive from [offsite cloud backup vendor]. When it arrived the following day, I did a full restore of the data (6 hours). So the site was down for two days.

Additionally, I’d love to see a parent/child UrBackup architecture. This way a site could have a local backup as well as the centralized backup. Others have suggested ways to make this happen, such as backing up a UrBackup Server over the internet as a client of another server, and that seems like a great idea. My additional suggestion is that the child server be able to pass backup status information to the parent server, so you can see all the sites, all the servers, and their last backup information on one screen.

Lastly, hats off to the UrBackup Development team. I’m genuinely impressed with the product!

As a new user it won’t let me post these links (says no more than 2 links, even though there are only 2 links here)… but here’s what comes after the base URL:

/t/first-seeding/161

/t/import-backups-from-another-server-site/1936