I started using UrBackup about 2 months ago, and I am quite fond of its image backup implementation and its great performance for incremental file backups.

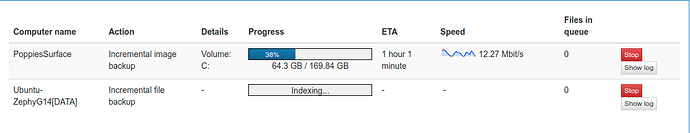

Unfortunately, I started noticing degraded performance, especially when doing raw copy-on-write image backups. Below you can find a screenshot of the “Activities” tab, where I’m running an incremental/full image backup for a new Windows client without CBT. (One of the 3 Windows clients actually has CBT enabled.)

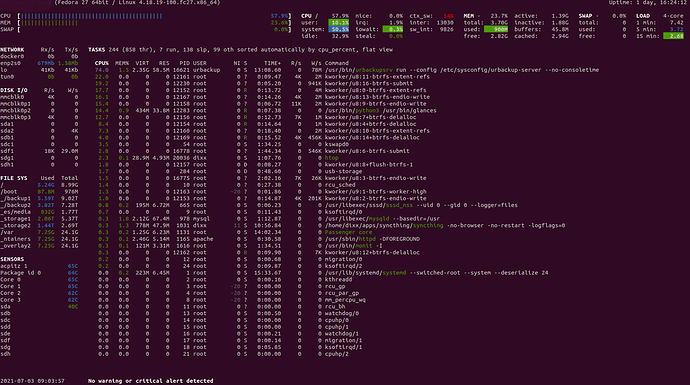

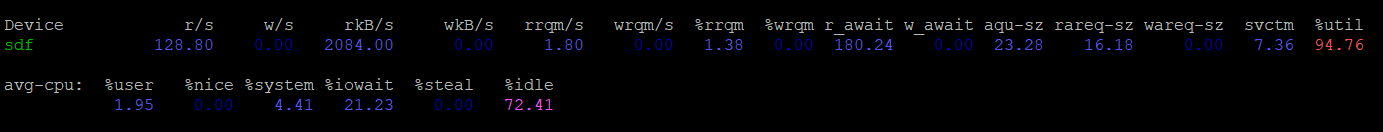

When I started using UrBackup, I would fully saturate my 100 MB/s connection to the NAS… Now, as you can see, performance varies hugely and image backups take forever. I’m using a headless Fedora 27 box, so I am not sure how best to monitor what is going on on the disk (it is certainly being thrashed, from what I can hear). Using iotop -o is not very helpful, as the output changes a lot; attached you can find the top activities reported by iotop (in this case, there are actually writes going on at 124 MB/s, but oftentimes it’s in the KB/s range).

iotop.txt (2.4 KB)
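
Since the iotop output jumps around too much to read, one alternative would be to average the kernel's per-device counters over a longer interval. Below is a minimal sketch, assuming the standard `/proc/diskstats` layout (sector counts in 512-byte units, busy time in milliseconds). The function names and the device name `sdb` are only illustrative; the real device name would have to be looked up first, for example with `lsblk`.

```python
import time

SECTOR_BYTES = 512  # /proc/diskstats counts 512-byte sectors regardless of the drive


def parse_diskstats(text):
    """Map device name -> (sectors_read, sectors_written, ms_busy)."""
    stats = {}
    for line in text.splitlines():
        fields = line.split()
        if len(fields) < 14:
            continue  # skip blank or unexpected lines
        # fields[2] = name, [5] = sectors read, [9] = sectors written,
        # [12] = milliseconds spent doing I/O
        stats[fields[2]] = (int(fields[5]), int(fields[9]), int(fields[12]))
    return stats


def rates(prev, cur, seconds):
    """Return (read MB/s, write MB/s, busy %) between two samples of one device."""
    read_mb = (cur[0] - prev[0]) * SECTOR_BYTES / seconds / 1e6
    write_mb = (cur[1] - prev[1]) * SECTOR_BYTES / seconds / 1e6
    busy = (cur[2] - prev[2]) / (seconds * 1000) * 100
    return read_mb, write_mb, busy


def read_sample(device):
    """Read the current counters for one device."""
    with open("/proc/diskstats") as fh:
        return parse_diskstats(fh.read())[device]


def monitor(device, interval=10):
    """Print averaged throughput for `device` every `interval` seconds."""
    prev = read_sample(device)
    while True:
        time.sleep(interval)
        cur = read_sample(device)
        rd, wr, busy = rates(prev, cur, interval)
        print(f"{device}: read {rd:.1f} MB/s, write {wr:.1f} MB/s, busy {busy:.0f}%")
        prev = cur
```

Calling `monitor("sdb")` would print one line every 10 seconds. A device that is close to 100% busy while writing only a few KB/s would suggest the drive itself is the bottleneck rather than the network or the CPU.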

This is a single 8 TB DM-SMR drive (external USB: Seagate ST8000DM004); the server itself is also a very low-power Intel NUC (NUC6CAYH) with 4 GB of RAM. I don’t expect incredible performance out of this, but the disk thrashing seems a bit excessive.

I mounted the drive with the following parameters:

LABEL=8TB_BTRFS_BU /drives/backup2 btrfs defaults,compress-force=zstd 0 0

The system kernel is 4.18.19-100.fc27.x86_64 and uses btrfs-progs v4.17.1.

I wonder if these are already signs of fragmentation on the disk? What could be causing the thrashing? Let me know if you need more information to answer my question(s).

P.S.: I realize there is a similar topic here: BTRFS very high iowait (even if stop urbackup service) Please help!

But my scope is much, MUCH smaller. As I said, I have 4 clients (3 Windows, 1 Linux), and I don’t see high io-wait.

I do infinite incremental image backups on all Windows clients (one daily, one every three days and one only once a week) and incremental file backups on Linux (every 2 hours).

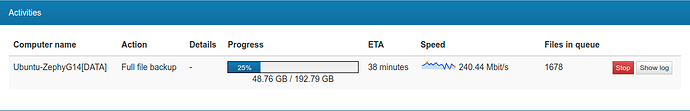

Edit 2: Okay, never mind. I do indeed run into high io-wait after a while:

Could it be an issue with the SMR drive’s cache filling up?
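
To put a number on the io-wait mentioned above without relying on screenshots, the aggregate `cpu` line of `/proc/stat` could be sampled twice and compared. This is only a sketch under the assumption of the usual field order (user, nice, system, idle, iowait, irq, softirq, steal); the helper names are made up for illustration.

```python
import time


def parse_cpu(text):
    """Return the counters from the aggregate 'cpu' line of /proc/stat."""
    for line in text.splitlines():
        if line.startswith("cpu "):
            return [int(x) for x in line.split()[1:]]
    raise ValueError("no aggregate cpu line found")


def iowait_percent(prev, cur):
    """Share of CPU time spent in iowait between two samples, in percent."""
    # Only the first 8 fields are summed; guest time is already part of user time.
    deltas = [c - p for p, c in zip(prev[:8], cur[:8])]
    total = sum(deltas)
    return 100.0 * deltas[4] / total if total else 0.0


def sample_iowait(interval=10):
    """Measure io-wait over `interval` seconds on the running system."""
    with open("/proc/stat") as fh:
        prev = parse_cpu(fh.read())
    time.sleep(interval)
    with open("/proc/stat") as fh:
        cur = parse_cpu(fh.read())
    return iowait_percent(prev, cur)
```

Logging `sample_iowait()` during a backup, next to the write throughput, might show whether io-wait climbs only after a sustained burst of writes, which would fit the idea of the SMR cache filling up.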