Same here

Can you tell me which kind of distribution + Linux kernel version? Thanks!

It surely happened on: Debian 8 (stock kernel: 3.16.0-4-amd64)

Ubuntu 14.04: 3.13.0-77-generic

Centos 7: 3.10.0-327.4.5.el7.x86_64

Yes, I have many combinations here, that’s why I’m doing so many tests.

Hello.

I’m new to this nice thing called “urbackup”, and I’m starting to love it.

I’ve installed this beta version (2.04) and I have a question:

When the backup activity (the first full backup) stops suddenly because of an unplanned shutdown of the server (the machine where the urbackup server is installed), the backup process won’t resume from the place where it stopped. It will begin a new full backup directory instead. Is that right, or is there something I can do about it?

thanks in advance… Great Work!!

Hi Uroni, something else I noticed, but I don’t have logs to back my story up.

First point:

I have a machine with symbolic links in the backup location that point to very large folders (Time Machine backup folders) outside the backup location. Even with the symbolic links set as excluded in the backup location settings, the indexing will try to index the very large Time Machine backup folders. For some reason the indexing never finished. The only way to get it to back up was to delete the symbolic links.

Second point:

After the initial full file backup, I recreated the link, thinking the issue might only have been with the initial file indexing. I let urbackup do its thing for a couple of days, until I got warnings that my backup space was low. I think the attempted backup of the Time Machine folders caused the server to write large amounts of temporary files.

I realise this is not much information to go on, especially point 2. Point 1 is pretty clear though I hope.

Thanks for this brilliant backup system !

Yes, that is by design. The server is assumed not to shut down suddenly so regularly that it makes sense to optimize for that case.

Is that Time Machine link always there? If yes, we should compile in an exclusion for that…

There is no such setting in UrBackup 2.0.x. If you are using the client with server 1.4.x you should upgrade, as 1.4.x doesn’t back up the file metadata and has only rudimentary symlink handling.

With UrBackup 2.0.x server+client you can disable following symlinks by flags to backup directories.

I.e. if you set no flags, the default flags are follow_symlinks,symlinks_optional,share_hashes.

So if you do not want to follow symlinks outside of the backup dir you set e.g. default directories to backup to /|root/symlinks_optional,share_hashes (or add /symlinks_optional,share_hashes to name in the select directories dialog). I’ll have to add this to the GUI at some point.
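As a rough illustration of the entry format described above, here is a small Python sketch that builds a `path|name/flags` string with `follow_symlinks` left out. The helper name `backup_dir_entry` is made up for this example and is not part of UrBackup; only the three flag names and the `/|root/...` format come from the post above.

```python
# Default flags as listed in the post above.
DEFAULT_FLAGS = ("follow_symlinks", "symlinks_optional", "share_hashes")


def backup_dir_entry(path, name, drop=("follow_symlinks",)):
    """Build a 'path|name/flag1,flag2' entry (hypothetical helper).

    Flags listed in `drop` are removed from the defaults.
    """
    flags = [flag for flag in DEFAULT_FLAGS if flag not in drop]
    if not flags:
        # The thread does not say what an empty flag list means,
        # so this sketch refuses to guess.
        raise ValueError("no flags left; behaviour not described in the thread")
    return f"{path}|{name}/{','.join(flags)}"


if __name__ == "__main__":
    print(backup_dir_entry("/", "root"))
    # prints: /|root/symlinks_optional,share_hashes
```

The resulting string is what would go into the default directories to backup setting, per the description above.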

Dear Mr. Uroni,

Is there a function in the client that makes it possible to shut down the computer when the backup is finished? If not, do you plan to develop it?

Best regards.

Hi Stefan,

I’m no expert but there is an option to use the postfilebackup.bat to run a shutdown script after backup completes.
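A minimal sketch of such a shutdown script in Python, assuming Python is installed on the Windows client and that postfilebackup.bat calls it with a `--run` argument. The script layout, the `--run` switch, and the 60 second delay are assumptions made for this example; only the Windows `shutdown /s /t` command itself is standard.

```python
import subprocess
import sys


def shutdown_command(delay_seconds=60):
    """Return the Windows command line that powers the machine off.

    /s requests a shutdown, /t sets the delay in seconds so a logged-in
    user still has time to cancel it with `shutdown /a`.
    """
    return ["shutdown", "/s", "/t", str(delay_seconds)]


# Only shut down when explicitly asked to, so that importing or testing
# this file never powers off the machine by accident.
if __name__ == "__main__" and "--run" in sys.argv:
    subprocess.run(shutdown_command(), check=True)
```

The `--run` guard is a deliberate choice: running the file without it does nothing.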

Hi Uroni,

I’m having a similar problem to Draga, with Windows clients backing up to a Windows Server 2012 server. I haven’t checked the logs yet, but I will the next time I pick up the problem. In short, it seems that if clients and server are restarted, backups run fine, but after a couple of backups clients seem to hang (not all of them, and not always the same ones) during indexing or even at certain percentages of completion during backups (and they never complete). In some instances I’ve just restarted the server, and when the clients come back online they finish their backups without a problem, but in most instances I need to restart the client and restart the backup process for it to finish.

It’s pretty much a daily occurrence, so I will get some logs for you.

I have a strange problem.

Some files are getting backed up endlessly (a VDI file from VBOX, a Firebird database).

It seems like the urbackup server does not recognize the end of the file and keeps writing data (0x00) without end to the backup storage.

Seems you are the first with backup storage that does not support sparse files, and the fallback is broken? What OS and storage do you use?

This will be fixed with the next version. Thanks for the hint!

Server OS: Debian 8

Storage: QNAP TS412 via NFS Mount.

Temp: standard EXT4

PS: DATTO BD runs absolutely fine on Debian too, had to compile from sources though


Ok, seems NFS does not support hole punching. They are working on NFS 4.2 which has support for it.
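For anyone who wants to check their own backup storage, here is a hedged Python sketch that tries to punch a hole into a scratch file via `fallocate(2)`. It assumes 64-bit Linux and calls the libc function through `ctypes`. The function name `supports_hole_punching` is made up for this example and has nothing to do with how the UrBackup server itself detects this.

```python
import ctypes
import errno
import os
import tempfile

# Mode bits from <linux/falloc.h>.
FALLOC_FL_KEEP_SIZE = 0x01
FALLOC_FL_PUNCH_HOLE = 0x02


def supports_hole_punching(directory):
    """Return True if fallocate(PUNCH_HOLE) works on a scratch file in `directory`."""
    # CDLL(None) exposes the symbols of the running process, including libc.
    libc = ctypes.CDLL(None, use_errno=True)
    libc.fallocate.argtypes = [ctypes.c_int, ctypes.c_int,
                               ctypes.c_int64, ctypes.c_int64]
    libc.fallocate.restype = ctypes.c_int

    size = 1024 * 1024
    with tempfile.NamedTemporaryFile(dir=directory) as scratch:
        scratch.write(b"\xff" * size)
        scratch.flush()
        mode = FALLOC_FL_PUNCH_HOLE | FALLOC_FL_KEEP_SIZE
        if libc.fallocate(scratch.fileno(), mode, 0, size) == 0:
            return True
        err = ctypes.get_errno()
        if err in (errno.EOPNOTSUPP, errno.ENOSYS):
            return False  # the filesystem or kernel rejects hole punching
        raise OSError(err, os.strerror(err))


if __name__ == "__main__":
    # Point this at the backup storage mount instead of the temp directory.
    print(supports_hole_punching(tempfile.gettempdir()))
```

A `False` result on the NFS mount would be consistent with the behaviour described above; any other error is raised rather than guessed at.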

You’d want to have that installed via dkms, though, so that it automatically rebuilds on kernel upgrades. If someone does the release management for the Debian packages, I’d gladly add them. Should be easy: take the Ubuntu packages and build them for the Debians (maybe even with https://build.opensuse.org ).

Hi Uroni,

OK, I have some feedback on my issue. It seems this could be occurring during the server cleanup window. This morning I had 4 clients stuck either indexing or during backups, and when I checked the time this occurred, it was all around the same time, in the middle of the server cleanup window.

I’ve changed the server cleanup window to be outside of the backup window now and will do some more tests to see if this resolves the issue.

Some background on my setup:

My backup window is from 24-7 daily and the cleanup window was from 3-4 daily. Every client would need a daily cleanup of data, as I’m only allowing 6 incr backups and every client runs daily backups over the internet. It’s also worth mentioning that I’m making use of Windows compressed folders for the backup data.

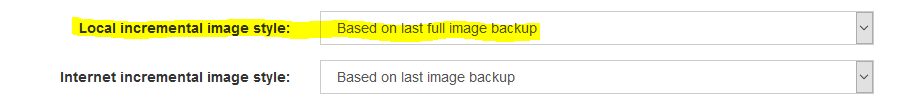

Hi, I’m having some trouble with the local incremental image backup mode setting:

If I change it to based on last image and save, it reverts back to last full image. If I then change it again and save, it appears to stay but if I then navigate away and come back to check, it has reverted back to full image.

I’ve successfully recreated this on another machine.

Hi,

Just an update on the error I was having: it was definitely linked to the cleanup window, as since changing this I’ve had two days without errors.

It was just strange because the setup was the same with 1.14.x and I never had this issue.

Hi,

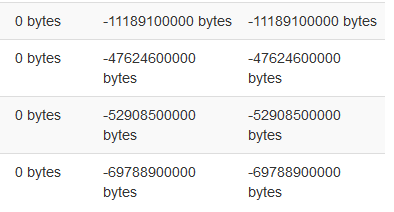

OK, I have an error on the web interface: some of my clients under statistics are showing negative sizes.

If you have any logs with regards to the two issues, that would be appreciated.

Thanks for the hint! Will be fixed.

Hello,

I’m new here. I just installed my first UrBackup server yesterday on an HP ProLiant MicroServer AMD NEO N54L, with 10GB RAM, 1 x 250GB OS HDD + 2 x 2TB HDD mounted in /media/backup/ with Btrfs.

I use only internet backup, and have set up nginx as the webserver with SSL, but because I couldn’t get fastcgi to work, I have chosen to just proxy requests to 127.0.0.1:55414.

I’m running the beta on both server and client, because I’m testing whether UrBackup will fit my needs. As long as I don’t use it for production yet, I’m fine with there possibly being problems.

So far I have seen the following:

On my Windows 10 client, and on a remote user’s machine, I have seen the following problem.

My Windows 10 machine was running all night, indexing, and in the morning it was still not finished. I found that Eset Endpoint Security, and also Eset Smart Security 9, has some conflict with the UrBackup client; I haven’t found out what yet.

But when I disabled realtime scanning and disabled “Calculate file-hashes on the client”, the UrBackup client started to back up within 1 hour.

The web UI doesn’t fit so well on my Nexus 5 phone on the status page. Is there any way to make this better?

And now I have some questions.

What happens if the user decides to shut down / reboot the PC while a backup is running?

How much will UrBackup transfer over the internet from my remote client in future backups? Will a full backup transfer the whole backup again next week?

Do you think that sometime in the future it would be possible to make backups from Android?

Btw. I saw a feature request here in the forum for grouping clients. I would also like to vote for that.

If someone would like a copy of my nginx vhost, please tell me.